
Modernize the code. Keep the brilliance.

How long would it take you to complete 25,000 tasks that take thirty minutes each?

Before you drag out your calculator, that’s 12,500 hours, or about 1,562 eight-hour working days. Wouldn’t it be better to have it all done in under an hour? That gives you an idea of how refactoring, when done correctly, can accelerate the modernization process and deliver accuracy in the range of just one error per 20,000 lines of code. That’s the kind of accuracy critical systems need. Let’s talk about how refactoring achieves it so fast.

The refactoring phase of modernization at TSRI takes a project beyond updating the code and database to modern standards and system architecture. Sure, the planning, assessment, transformation, and integration phases of a modernization process get the bulk of the work done. But more is needed. Imagine if a great painter only took a portrait to the point of getting the features in the right places. For functional equivalency, high performance, and future-readiness, you need to dive into the details to get them as right as the original, and ready for future enhancements and needs.

That’s where refactoring can be hugely beneficial. If, after the transformation and integration phases, you have to find and address each issue manually across hundreds of thousands or millions of lines of code, you may as well add those 12,500 or more hours back in. That’s why refactoring is key. By including an iterative code scanning and refactoring phase in the modernization process, TSRI automatically and semi-automatically remedies, at scale, a host of issues that would make developers run for the hills, including:

- Pinpointing and getting rid of dead or non-functional code

- Merging and consolidating duplicate code and data

- Improving design of code and data

- Eliminating system flaws from transformed software
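To make the first item concrete, here is a minimal sketch of one common way to pinpoint dead code: treat the application as a call graph and flag every procedure that cannot be reached from any entry point. It is an illustration under stated assumptions, not TSRI’s tooling: the call graph is assumed to be already extracted, the procedure names are hypothetical, and dynamic calls (function pointers, reflection) are ignored.

```python
from collections import deque


def find_dead_procedures(call_graph: dict[str, list[str]], entry_points: list[str]) -> set[str]:
    """Return the procedures that no entry point can ever reach."""
    reachable: set[str] = set()
    queue = deque(entry_points)
    while queue:
        proc = queue.popleft()
        if proc in reachable:
            continue
        reachable.add(proc)
        # Follow every call this procedure makes; callees missing from the graph are ignored.
        queue.extend(call_graph.get(proc, []))
    return set(call_graph) - reachable


# Hypothetical call graph extracted from a transformed application.
calls = {
    "main": ["load_accounts", "post_interest"],
    "load_accounts": ["read_record"],
    "post_interest": ["read_record", "write_record"],
    "read_record": [],
    "write_record": [],
    "legacy_report": ["format_line"],  # nothing reachable from main calls this
    "format_line": [],
}

print(sorted(find_dead_procedures(calls, ["main"])))  # ['format_line', 'legacy_report']
```

A real tool would also need to account for procedures invoked from outside the codebase (job schedulers, external callers) before removing anything flagged this way.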

"TSRI's refactoring process creates reusable components that can be applied to future projects"

And beyond those cleaning and refining functions, a well-designed refactoring process also provides forward-looking advantages. TSRI’s refactoring process improves maintainability, remediates security vulnerabilities, increases performance, and modularizes functionality. It also creates reusable components that can be applied to future projects for optimization, packaging, and redistribution.

When you’re able to reuse some or all of the outputs of automated or semi-automated refactoring, you don’t have to recreate the mechanisms for modern microservices, REST calls, and other reusable elements. They’re at your fingertips, ready for integration into modern environments or databases on future projects. It gives you the best of modularity, but customized and created specifically for your systems’ needs, such as data dictionaries, code and record consolidation, introduction of logging or comments, and more.

"It gives you the best of modularity, but customized and created specifically for your systems' needs."

One scan, one rule, and thousands of fixes

A key part of the refactoring process is scanning the newly modernized code to find issues for remediation. To do this, we use SonarQube, an open-source platform for continuous inspection of code quality. It provides a detailed report of bugs, code smells, vulnerabilities, code duplications, and more. Once SonarQube has identified problems in the code, the TSRI team can use the results to resolve maintainability issues and security vulnerabilities.

This is where the economy of rules comes in. Once SonarQube has pointed out issues across thousands of lines of code, TSRI uses that intel to identify the types of issues that need to be addressed. When an issue appears once in an application, it often appears hundreds or thousands of times, and a single rule applied across all code can eliminate a host of individual instances.
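A minimal sketch of that triage step, assuming the scan results have been exported as a list of records, each with a rule key and an effort estimate. The shape loosely follows issues returned by SonarQube’s Web API, but the field names, the effort format (only hours and minutes are handled), and the rule keys are assumptions for illustration. Ranking rules by total estimated effort shows which single rule would pay off most if automated.

```python
import re
from collections import Counter, defaultdict


def effort_minutes(effort: str) -> int:
    """Convert an effort string such as "30min", "2h" or "1h30min" to minutes.

    Assumption: only hour and minute units appear; day units are not handled.
    """
    match = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)min)?", effort)
    if match is None:
        raise ValueError(f"unrecognised effort string: {effort!r}")
    hours, minutes = match.groups()
    return int(hours or 0) * 60 + int(minutes or 0)


def rank_rules(issues: list[dict]) -> list[tuple[str, int, int]]:
    """Group issues by rule key and rank rules by total estimated effort, highest first."""
    counts = Counter(issue["rule"] for issue in issues)
    minutes = defaultdict(int)
    for issue in issues:
        minutes[issue["rule"]] += effort_minutes(issue.get("effort", "0min"))
    return sorted(
        ((rule, counts[rule], minutes[rule]) for rule in counts),
        key=lambda row: row[2],
        reverse=True,
    )


# Hypothetical scan export: rule keys and efforts are invented for illustration.
issues = (
    [{"rule": "example:string-compare", "effort": "30min"}] * 4
    + [{"rule": "example:unused-local", "effort": "5min"}] * 3
    + [{"rule": "example:long-method", "effort": "1h"}]
)

for rule, count, total in rank_rules(issues):
    print(f"{rule}: {count} issues, {total} min of estimated effort")
```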

So how did we get to those 12,500 hours we started with? We didn’t just make it up. In a TSRI project for Deutsche Bank, a single rule created based on a scan of the code fixed about 25,000 instances of that issue. SonarQube estimated it would take 30 minutes to fix each instance. That means refactoring automated the remediation process and saved them about 12,500 hours of software development time. That’s a lot of Marks.
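The arithmetic behind that figure is 25,000 instances × 30 minutes = 750,000 minutes, or 12,500 hours. The sketch below pairs that calculation with a hypothetical rewrite rule of the kind described above: one pattern, putting the string literal first in Java equality checks, applied to every file in a single pass. It is illustrative only. The rule, file names, and regex-based approach are assumptions, not the rule used on the Deutsche Bank project, and a production tool would rewrite a parsed syntax tree rather than raw text.

```python
import re

# Hypothetical rule: put the string literal first in Java equality checks, so
# name.equals("ADMIN") becomes "ADMIN".equals(name) and cannot throw on a null name.
# Naive regex matching: only a plain identifier directly before .equals("...") is handled.
PATTERN = re.compile(r'\b(\w+)\.equals\("([^"\\]*)"\)')
REPLACEMENT = r'"\2".equals(\1)'


def apply_rule(sources: dict[str, str]) -> tuple[dict[str, str], int]:
    """Apply the rule to every file; return the rewritten files and the number of fixes."""
    fixed: dict[str, str] = {}
    total = 0
    for path, text in sources.items():
        new_text, count = PATTERN.subn(REPLACEMENT, text)
        fixed[path] = new_text
        total += count
    return fixed, total


def hours_saved(instances: int, minutes_each: int) -> float:
    """Manual effort avoided when every instance is fixed by the rule."""
    return instances * minutes_each / 60


files = {
    "Login.java": 'if (role.equals("ADMIN")) { grant(); }',
    "Audit.java": 'if (action.equals("DELETE") || action.equals("PURGE")) { log(); }',
}
fixed, count = apply_rule(files)
print(fixed["Login.java"])      # if ("ADMIN".equals(role)) { grant(); }
print(count)                    # 3
print(hours_saved(25_000, 30))  # 12500.0
```

The same one-pass structure scales from three instances to 25,000: the cost of writing and validating the rule is paid once, whatever the instance count.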


The proof is in the work. Refactoring can save thousands of coder hours, and a combination of code scanning and refactoring can also uplevel your modernization with:

- Maintainability: making it easier to update and manage code going forward

- Readability: helping modern developers find and improve the functions they need more easily

- Security: increasing the speed with which security issues can be found and remediated, either manually or through refactoring rules

- Performance: greatly increasing the efficiency of the application, for instance by enabling multiple services to run in parallel rather than sequentially
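The parallel-versus-sequential point can be illustrated with a small Python sketch. The service names and the 0.2-second latency are hypothetical stand-ins for I/O-bound calls such as REST requests. Threads help here because the work is mostly waiting, not computing; CPU-bound work in CPython would call for processes instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor

SERVICES = ["accounts", "rates", "ledger", "audit"]  # hypothetical service names


def call_service(name: str) -> str:
    """Stand-in for an I/O-bound service call, simulated with a 0.2 s sleep."""
    time.sleep(0.2)
    return f"{name}: ok"


def run_sequentially(services: list[str]) -> list[str]:
    # Each call waits for the previous one: total time is roughly the sum of latencies.
    return [call_service(name) for name in services]


def run_in_parallel(services: list[str]) -> list[str]:
    # Calls overlap: total time is roughly the slowest single latency.
    # pool.map returns results in input order, matching the sequential version.
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        return list(pool.map(call_service, services))


def timed(fn, services):
    start = time.perf_counter()
    result = fn(services)
    return result, time.perf_counter() - start


seq_result, seq_time = timed(run_sequentially, SERVICES)
par_result, par_time = timed(run_in_parallel, SERVICES)
print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
```

Both versions return identical results; only the elapsed time differs, which is the kind of behavior-preserving change a refactoring step aims for.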

Find out what refactoring done right can do for you: contact TSRI now.

----

Proven by decades of results. Prove it for yourself.

For decades, TSRI clients have been discovering a dramatically faster, more accurate, and less expensive AI-based and automated modernization process. We’ve earned a place as the go-to resource for enterprise corporations, government, military, healthcare, and more. Now prove it for yourself. Find out how the proprietary TSRI modernization process delivers future-ready, cloud-based code in any modern language in a fraction of the time.

See Case Studies

Learn About Our Technology

Get Started on Your Modernization Journey Today!


Give Ada embedded code a new lease on life

Isn’t it time to give Ada a break?

The coding language’s namesake, Ada Lovelace, had a rough life. From birth she was a pawn in an infamous aristocratic marital deathmatch. Her father, the famous poet Lord Byron, despised her, calling her an “instrument of torture.” Her mother deeply resented her and pushed her into mathematics to spite her literary rockstar father.

But Ada thrived in the world of numbers. Working with Charles Babbage, generally considered the father of the computer, Ada first conceptualized the idea of a computer language, prophetically referring to Babbage’s Analytical Engine as a “thinking machine.” She devised the first program for one of the earliest mechanical computing designs: an algorithm for the Analytical Engine to calculate Bernoulli numbers.

“A new, a vast, and a powerful language is developed for the future use of analysis”

—Ada Lovelace

Just look at Babbage’s engines and you can see that Ms. Lovelace worked at the intersection of computing software and hardware machinery. So it made sense that the US Department of Defense named its largest embedded coding project ever after her. The Ada language, first standardized in 1980 as MIL-STD-1815, is a high-level language designed for real-time and embedded systems; object-oriented features were added in the Ada 95 revision.

“If Ada fails, people die.”

Those real-time constraints have meant that Ada has to perform without error. It’s used in safety-critical embedded systems for air traffic control, avionics, weapons control, global positioning navigation, and medical equipment. If Ada fails, people die.

Why break up with Ada?

Ada has served remarkably well over the years and still does. But as the nature and scale of computing change beyond even Ada Lovelace’s wildest imaginings, her namesake needs updating for the cloud age. The disadvantages are starting to tell:


Ada coders are rare—and expensive

Ada is less common than Java, and the ratio of Java programmers to Ada programmers is now about 20:1. That relative scarcity has also driven up the cost of hiring Ada coders.


Ada is less integration-friendly

Most Java and C# installations are not easy to integrate with Ada applications, taking more of those expensive Ada coder hours to implement and maintain.


Ada compilers are getting harder to find

With the decline in use comes a decline in vendors. The increasing scarcity of compilers will drive up costs while reducing sources of innovation.


Ada’s licensing future is uncertain

As more and more systems modernize, Ada licensing will change. And there’s no indication that it will get easier or less expensive. As Ada becomes more and more a “collector’s item,” its price will probably rise.


The libraries are old and aging

Sure, once an Ada library is running it requires little maintenance, but for exactly that reason Ada libraries tend to be old and rarely updated (“If it ain’t broke, don’t fix it.”). That presents a risk.

Eventually, Ada will be so far behind that the costs of running it will outweigh the benefits of keeping it performing as flawlessly as necessary. But often it’s hard to see the additional cost of Ada coders, missed innovation opportunities, creeping licensing costs, and higher risk until something becomes urgent. The best approach is to take care of it sooner rather than waiting for a crisis.

Giving Ada a second—and better—life

So how do you continue, and improve on, Ada’s outstanding track record while clearing the way for the fast-evolving needs of modern computing? And how do you do it all without disrupting the systems that keep air traffic safe, protect warfighters, and pinpoint GPS satellites? Well, if you’ve flown to Europe in the last 10 years, you’ve already experienced TSRI’s solution in action.

Over decades and through hundreds of modernization projects, TSRI has perfected an automated methodology that delivers full assurance and greater speed at a fraction of the cost of manual modernization efforts.

Using AI and machine learning, TSRI’s proprietary JANUS Studio® automates 99.9X% of the modernization process, while maintaining (and improving) the exacting levels of performance Ada embedded systems demand. Code modernization projects that would have taken years now take months—or weeks—and can save over 90% on post-modernization code maintenance.

Speaking of thinking machines, JANUS Studio® learns and evolves with every line of code it sees and transforms. For each modernization, its unique modeling format takes advantage of the over 200 million lines of code it has transformed for higher and higher speed and accuracy. That means faster, continually more assured, and less costly modernization for your Ada code.

TSRI’s near-100%-automated transformation technology accurately, quickly, and cost-effectively transforms legacy code, underlying databases, and user interfaces into multi-tiered modern environments. Then, to achieve unrivaled accuracy, it documents and refactors the modernized application using the same fully automated technology and model-driven iterative approach.

If you think this is something for future consideration, it’s not. Action is going to be needed, and soon. In the coming months, government agencies and other organizations will need to respond to the Legacy IT Reduction Act, a bill recently introduced by Senators John Cornyn (R-TX) and Maggie Hassan (D-NH). It mandates modernization and calls for specific steps to be taken right away on passage to show progress. So, even if it feels like Ada is still doing its job, it’s time to start thinking about showing her the door.

Take a look at some of the major clients whose Ada code has gotten a second, modernized life through TSRI:

Eurocat Air Traffic Management System (TopSky) tracks commercial aircraft over 19 European countries and Australia. Transformation of Ada to both Java and C++ with improved performance and maintainability.

The Canadian Armed Forces Crypto Material Management System (CMMS) handles the reception, distribution and control of NATO cryptographic material. Conversion of Ada to C# at 100% automation.

GEMS (Geospatial Embedded Mapping Software) provides real-time ground proximity information for the B-52, F-15, and other aircraft. 100% successful conversion with unit testing that met and exceeded military avionics standards.

The Navy’s P-3C Orion aircraft acoustic signal processor receives and analyzes sonobuoy data for maritime patrol and reconnaissance. Modernization of Ada code to C++ with 100% compatibility and error-free linkages to the target platform.

Get ahead of mandated modernization: contact TSRI now.

---

TSRI is Here for You

TSRI brings software applications into the future quickly, accurately, and efficiently with low risk and minimal business disruption, accomplishing in months what would otherwise take years. As a leading provider of software modernization services, TSRI enables technology readiness for the cloud and other modern architecture environments.


How to Achieve 99.9X% Automated Transformation & Refactoring with the Intermediate Object Model (IOM)


How much automation actually makes a difference?

10% of 10 million lines of code is still 1 million lines.

A major modernization project involving millions or tens of millions of lines of code can take years and cost millions of dollars. Using artificial intelligence, modern computing methods, and automation, much of that time and expense can be eliminated. The question is, how much? If your project starts out at 10 million lines of code and your automation technique cuts that down by 90%, you’re still left with a 1-million-line project that can take months to manually assess, document, transform, and refactor.
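The arithmetic behind that point is easy to check. This sketch computes how many lines remain for manual work at a few automation rates, treating 99.9% as the lower bound of the “99.9X%” figure used in this article. Rates are passed as decimal strings so the results are exact rather than subject to floating-point rounding.

```python
from decimal import Decimal


def residual_manual_lines(total_lines: int, automation_rate: str) -> int:
    """Lines still needing manual work; the rate is a decimal string such as "0.999"."""
    remaining_fraction = Decimal(1) - Decimal(automation_rate)
    return int(total_lines * remaining_fraction)


for rate in ["0.90", "0.99", "0.999"]:
    left = residual_manual_lines(10_000_000, rate)
    print(f"{float(rate):.1%} automated: {left:,} lines left for manual work")
```

Even at 99.9%, a 10-million-line system leaves roughly 10,000 lines for people to handle, which is why the last fractions of a percent matter so much.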

To appreciably accelerate large modernization projects, automation must eliminate as close to 100% of the effort as possible: in the range of 99.9X%. Without that, your organization can be left with significant and costly manual loads that can take years, strain budgets, result in unacceptable error rates, and still produce poorly written modern code.

Even advanced automation cannot take code straight from any source language to a modern cloud-ready language. First, the code must be translated into a universally accepted language modeling system and standard that maximizes the extensibility and efficiency of application analysis, transformation, and refactoring: an Intermediate Object Model (IOM). This is one of the keys to accelerating modernization that The Software Revolution Inc. (TSRI) has implemented for its clients.

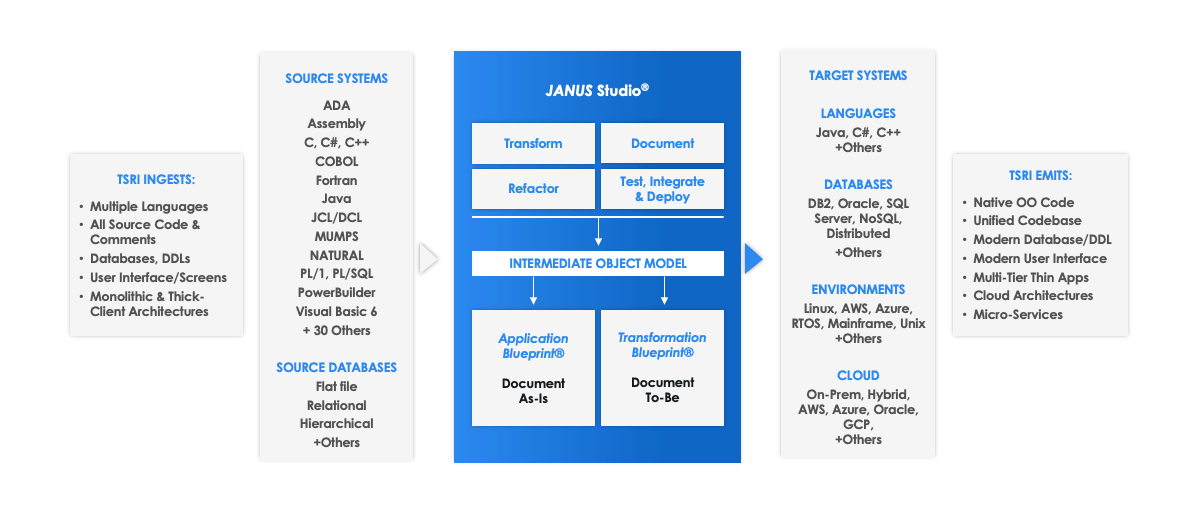

The languages used for specifying grammars and transformations must fit the specific problems at hand and be as expressive and efficient as possible. TSRI has developed and defined three domain-specific, high-level specification languages, JPGEN™, JTGEN™, and JRGEN™:

- JPGEN™ defines grammar systems and language models

- JTGEN™ delineates transformations between these models

- JRGEN™, a 5th-generation artificial intelligence language, supports first-order logic and predicate calculus as well as 3GL and 4GL language constructs

Together, these three proprietary tools comprise TSRI’s JANUS Studio®.

IOM: From Quadratic to Linear Transformation Effort

The three components of JANUS Studio® transform and compile software code originally developed in languages such as Ada, Visual Basic, Vax Basic, C, C++, COBOL, C#, Java, Jovial, FORTRAN, and more than 30 others into efficient, platform-neutral C++. The core function of the IOM is to create a language-neutral model into which all legacy source languages are transformed, and from which all modernized target languages can be generated. The IOM is effectively a universal translator. Instead of a separate translator for every pair of languages, each language needs only a path into and out of the IOM, which simplifies the “O(n-squared)” language transformation problem to a much simpler “O(n+1)” problem. The IOM provides a set of generic language constructs that serve as a reusable, language-neutral formalism for assessment, documentation, transformation, refactoring, and web-enablement.
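A toy illustration of both ideas, under loudly stated assumptions: the `Print` node and the three generator functions are hypothetical and vastly simpler than the proprietary IOM, and the translator count assumes every language needs exactly one front end into the model and one back end out of it. Translating directly between n languages needs n(n − 1) translators (one per ordered pair), while routing through a shared model needs about 2n, so the effort grows linearly rather than quadratically.

```python
from dataclasses import dataclass


@dataclass
class Print:
    """A toy, language-neutral statement node: print the value of one variable."""
    variable: str


# Hypothetical back ends: every target language is generated from the same node.
GENERATORS = {
    "C++": lambda node: f"std::cout << {node.variable} << std::endl;",
    "Java": lambda node: f"System.out.println({node.variable});",
    "C#": lambda node: f"Console.WriteLine({node.variable});",
}


def generate(node: Print, target: str) -> str:
    return GENERATORS[target](node)


def translator_count(languages: int) -> tuple[int, int]:
    """Translators needed for n languages: direct pairwise vs. via one shared model."""
    direct = languages * (languages - 1)  # one translator per ordered (source, target) pair
    via_model = 2 * languages             # one front end into and one back end out of the model
    return direct, via_model


# The node might come from, say, a COBOL DISPLAY statement (parsing not shown).
node = Print("balance")
for target in GENERATORS:
    print(f"{target}: {generate(node, target)}")
print(translator_count(40))  # (1560, 80)
```

For 40 languages, that is 1,560 direct translators versus 80 through the model, and adding a 41st language means writing two new components rather than 80.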

This solution allows simple 64-bit multi-processor Linux platforms to analyze massive software models using parallel processing.

Built on the IOM and backed by the expertise of TSRI’s exceptionally skilled developers, JANUS Studio® is simply the most powerful, least expensive, and lowest risk technology available to automatically modernize (assess, document, transform, refactor, and web-enable) legacy systems. With the AI-enhanced capabilities of JANUS Studio®, 99.9X% of software transformation can be automated, cutting transformation project times from years to months, or even weeks.

"Simply the most powerful, least expensive, and lowest risk technology available to automatically assess, document, transform, refactor, and web-enable legacy systems."

A Model for Automated Modernization

As Scale Increases, So Does Efficiency

JANUS Studio® lets TSRI developers cut modernization projects drastically, using artificial-intelligence-based modeling to drive that 99.9X% automation rate. Not only that, but because every application and every language is modernized and transformed at the meta-model stage, every prior project (regardless of language or industry) further develops the toolset and TSRI libraries, resulting in greater value for each future project. The more scale and the more uses, the more efficient the process.


It’s no surprise that TSRI adheres to the Object Management Group (OMG) principles of model-based and architecture-driven software modernization. We’re not just a member: TSRI is the principal author of the Abstract Syntax Tree Meta-Modelling Standard used by the OMG. We’re deeply involved in the innovations and future of OMG and look forward to the next Member Meeting, March 21-25 in Reston, Virginia. If you plan to be there, we’d love to talk about how to significantly accelerate your modernization projects while lowering cost and error rates.



Modernizing Your PL/1 Applications Preserves Functionality While Extending the System’s Lifespan

PL/1 - Life in COBOL's Shadow

When IBM developed the framework for its PL/1 computing language in the 1960s, the committee of business and scientific programmers that defined the language’s parameters could not have foreseen today’s technologies: a phone in every pocket and internet access for anyone on the planet. They may also have hoped for, but likely did not foresee, a mainframe language and architecture surviving 60 years and still being in use today.

Though PL/1 never reached the popularity of its competitor COBOL, it was still used on multiple government, corporate, and defense mainframe systems over the years. It is, by many accounts, a very stable and reliable language; in some areas it even had more features than COBOL! However, programmers and organizations had little business or technical incentive to adopt it. Today, after living so long in the shadow of COBOL and Fortran, PL/1 has fewer experienced programmers each year, making it increasingly difficult to maintain the remaining mainframe applications. Those challenges have made PL/1 a desirable candidate for transformation into a modern language like C++, C#, or Java.

Modernizing PL/1 - Success is in the Details

When it comes to automated modernization and refactoring, a successful outcome lies in the details. For example, before transforming any code, TSRI engineers spend considerable time working out how a step-wise process could allow a critical system to operate continuously without disruption or data loss. Going step-wise means that individual components of the system, such as the login function or other application programming interfaces, can be modernized, tested, and, if necessary, reverted to the original application without any impact on the rest of the application or the end user. An automated modernization creates a like-for-like replica of the functionality in the target language (in this case C# in a Windows environment), and the automated transformation engine continues to learn and adjust, so each successful step makes subsequent transformations easier.
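The step-wise idea resembles the well-known strangler-fig pattern, which this minimal sketch uses as a stand-in (it is not TSRI’s mechanism): a router sends each component, such as login, to either its legacy or its modernized implementation, switches only after the new version matches the old one on sample inputs, and can revert at any time. The class, method names, and sample handlers are hypothetical.

```python
from typing import Callable

Handler = Callable[[str], str]


class StepwiseRouter:
    """Hypothetical router: each component runs either its legacy or its modernized version."""

    def __init__(self, legacy: dict[str, Handler]):
        self.legacy = dict(legacy)
        self.active = dict(legacy)  # every component starts on the legacy implementation

    def migrate(self, name: str, modern: Handler, samples: list[str]) -> bool:
        """Switch one component over only if the modern version matches legacy on every sample."""
        if all(modern(s) == self.legacy[name](s) for s in samples):
            self.active[name] = modern
            return True
        return False  # parity check failed: routing for this component is left unchanged

    def revert(self, name: str) -> None:
        """Route the component back to its legacy implementation."""
        self.active[name] = self.legacy[name]

    def call(self, name: str, arg: str) -> str:
        return self.active[name](arg)


legacy = {"login": lambda user: f"WELCOME {user.upper()}"}
router = StepwiseRouter(legacy)

print(router.migrate("login", lambda user: f"Welcome {user}", ["ann"]))                  # False
print(router.migrate("login", lambda user: "WELCOME " + user.upper(), ["ann", "bob"]))  # True
print(router.call("login", "ann"))  # WELCOME ANN
router.revert("login")              # instantly back on the legacy code path
```

Because only the routing table changes, a failed or reverted step never touches the other components or the caller.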

Following the transformation of PL/1 to the modern computing environment, an automated, iterative refactoring process can find redundant, dead, or unused code and remediate it while making the entire codebase more efficient. Because PL/1 was designed to facilitate programming using human-readable logic, automated refactoring can also reduce code overhead significantly. Furthermore, the transformation from the monolithic environment of early mainframe languages to a multi-tier environment separates the programming from the data and the user interface, enabling further functional development while preventing system disruption.
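One simple way to spot redundant code is to normalize each procedure body and group bodies that hash to the same value. This sketch assumes a case-insensitive source language and treats only whitespace and letter case as insignificant; the procedure names are hypothetical, and a real tool would compare syntax trees so that renamed variables and string literals are handled correctly.

```python
import hashlib
import re
from collections import defaultdict


def normalize(body: str) -> str:
    """Collapse whitespace and fold case so layout-only differences don't hide duplicates.

    Assumes a case-insensitive source language; note this also folds string literals,
    which a real tool working on syntax trees would not do.
    """
    return re.sub(r"\s+", " ", body).strip().lower()


def find_duplicates(procedures: dict[str, str]) -> list[list[str]]:
    """Group procedure names whose normalized bodies are identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name, body in procedures.items():
        digest = hashlib.sha256(normalize(body).encode()).hexdigest()
        groups[digest].append(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


# Hypothetical PL/1-style procedure bodies.
procs = {
    "CALC_TAX_A": "TAX = AMOUNT * RATE;\nRETURN (TAX);",
    "CALC_TAX_B": "tax = amount * rate;   return (tax);",
    "PRINT_HDR": "PUT SKIP LIST ('REPORT');",
}
print(find_duplicates(procs))  # [['CALC_TAX_A', 'CALC_TAX_B']]
```

Each group found this way is a candidate for consolidation into a single shared procedure.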

PL/1 is not unique in how the architectures that run it can be modernized, but given the age of the language and the lack of business or programmer support, an automated modernization may be the best way to transform PL/1 applications to modern languages and architectures.
